Statistical physics of disordered systems, information, and inference

Lectures: Johannes Berg

Exercises: Arman Angaji

This lecture course gives a unified perspective on disordered systems, information theory, and the statistical theory of inference. These fields share a common methodology, namely the entropy of typical and of rare configurations. Applications will be taken from statistical modelling, machine learning, and communication. Topics include

• introduction to probability and information theory

• information theory and the foundations of statistical physics, the principle of maximum entropy

• typical and rare events, the source coding theorem

• disordered systems: introduction to spin glass theory

• statistical inference: the basics of data science

• outlook: inverse problems, the inverse Ising problem

The course is part of the area of specialization "Statistical and biological physics" of the Master in physics. The course is self-contained; prior knowledge of advanced statistical physics (at the level of the Master's course) is useful but not required.

Times and places

Lectures take place Tuesdays 16:00-17:30 (alternating with problem classes) and Thursdays 12:00-13:30 in Seminar Room 3 in the new theory building. The course starts on 4 April. All lectures will also be recorded and made available for download on ILIAS.

Registration: If you are going to take this class for credit (this is most of you), please sign up on KLIPS2.0. Registering should automatically give you access to the relevant ILIAS page, where you will find the recorded lectures and the problem sets. Otherwise, connect to this ILIAS page and sign up for membership. Use the tab "Sprache" (language) on the top right to set the language to English. Let me know via email if there are technical problems or if you have questions.

There will be a tutorial class every two weeks on Tuesdays 16:00-17:30, also in Seminar Room 3 in the new theory building. There is also an advanced seminar accompanying this course (https://www.ilias.uni-koeln.de/ilias/goto_uk_crs_5097200.html).

Literature

Cover and Thomas, Elements of Information Theory (Wiley)

MacKay, Information Theory, Inference, and Learning Algorithms (CUP)

Mézard and Montanari, Information, Physics, and Computation (OUP)

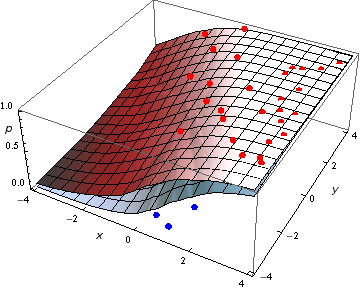

Picture: Confidence region for the family of support vector machines endowed with hyperbolic tangent profile function, by B. Apolloni, S. Bassis, D. Malchiodi, CC BY-SA 3.0, via Wikimedia Commons